AI News

Palantir and Microsoft Team Up to Supercharge National Security with AI

Imagine using the enormous amounts of data created every second to protect nations and keep people safe.

This idea is turning into reality thanks to a new partnership between Palantir and Microsoft. Both companies are known for their innovative approaches to security, and now they’re working together to bring advanced AI and analytics to national security efforts. Their goal? To make the world a safer place, one smart decision at a time.

What the Partnership Entails:

Palantir and Microsoft are combining their strengths to support national security with cutting-edge technology. Palantir’s data analytics platform is being integrated with Microsoft’s Azure Government, a cloud service built for U.S. government agencies. This powerful combination will help security agencies analyze data in real time, detect threats faster, and respond with greater precision:

- AI-Powered Security: The partnership will provide security agencies with AI-driven insights by analyzing large amounts of data. This will help them identify and respond to threats quickly and accurately.

- Top-Notch Security: Palantir’s platform is known for its strong security, ensuring that sensitive data is protected within Microsoft’s secure cloud environment.

- Efficiency Boost: By automating routine tasks and delivering actionable intelligence, this partnership will make national security operations more efficient, allowing agencies to focus on what matters most.

Benefits of the Partnership:

- Smarter Decisions: With advanced AI and analytics, security agencies can make quicker, more informed decisions in critical situations.

- Built to Scale: The technologies from Palantir and Microsoft are designed to grow with the needs of national security, handling more data and adapting to new challenges.

- Better Collaboration: A unified platform means different agencies can work together more effectively, sharing insights and strategies in real time.

Classified Networks as Main Focus

This partnership is particularly important for classified networks, which need the highest levels of security and performance. By using Microsoft’s Azure Government cloud, Palantir and Microsoft ensure that their solutions meet federal regulations and are suitable for the most sensitive operations. This means that even the most classified data can be managed securely and efficiently.

Why This Partnership Matters:

The collaboration between Palantir and Microsoft is a big step forward in using AI for national security. As the world generates more and more data, the ability to analyze and act on it quickly is crucial. This partnership not only addresses current security challenges but also sets the stage for future innovations in how governments keep their citizens safe. As the two companies continue to work together, expect new ways of handling security that are faster and smarter than before.

OpenAI’s Investment Boom: Valued at $157 Billion

OpenAI, one of the most talked-about companies in the artificial intelligence space, has just secured a massive funding round, raising $6.6 billion and pushing its valuation to a staggering $157 billion. This positions OpenAI as one of the most valuable privately held companies globally, trailing only giants like SpaceX and ByteDance.

From Non-Profit to Powerhouse

OpenAI’s journey began as a non-profit, driven by a mission to ensure AI benefits all of humanity. Backed by early investments from tech heavyweights like Elon Musk, Sam Altman, and Reid Hoffman, OpenAI initially focused on research and innovation. However, its shift to a capped-profit structure allowed for larger investments, helping the company scale more quickly. This change has drawn substantial investor interest, including significant contributions from Microsoft and Thrive Capital.

Recent Investment Round: A Sign of Confidence and Pressure

The latest $6.6 billion investment round, led by Thrive Capital, Microsoft, and NVIDIA, reflects confidence in OpenAI’s potential. Major tech firms like Microsoft and NVIDIA are both investors and customers, relying on OpenAI’s advanced AI models to strengthen their own platforms and products.

However, OpenAI’s financial situation is far from stable. Despite generating an estimated $4.5 billion in revenue this year, the company is projected to face $5 billion in losses due to the high costs of training its sophisticated AI models. Training these models—necessary for the development of tools like ChatGPT—comes with a hefty price tag, with reports suggesting costs as high as $7 billion.

Strategic Partnerships and Withdrawals

OpenAI’s partnerships have been critical to its rapid ascent. Microsoft, which invested $1 billion in 2019 and followed up with another $10 billion in 2023, has become one of OpenAI’s closest allies. This partnership integrates OpenAI’s technology into Microsoft Azure, driving both companies forward in the AI race.

Yet, not all potential partners have remained onboard. Apple, a tech giant traditionally focused on privacy and user data security, reportedly pulled out of the latest investment round. This aligns with Apple’s strategic focus on privacy-centric AI development, signaling its cautious approach in contrast to OpenAI’s aggressive growth model.

Can OpenAI Deliver on Its Promises?

Despite the enormous influx of capital, OpenAI faces intense scrutiny. The question on everyone’s mind is: can OpenAI sustain its growth and continue to add value? OpenAI’s vision of creating artificial general intelligence (AGI), a form of AI that could perform any intellectual task as well as a human, remains one of the company’s boldest goals. Thought leaders across the tech industry have varying opinions on whether AGI is achievable, and on OpenAI’s capacity to lead this revolution.

Zachary Huhn, an AI strategist, comments, “When you consider the potential of AI to create new systems of collaboration—AI agents working together and building on their own progress—a valuation like OpenAI’s begins to make more sense.”

But there are doubts. As more AI companies emerge, OpenAI will need to continuously innovate to maintain its lead. Even with its hefty valuation and considerable backing, OpenAI’s future will be shaped by its ability to manage operating costs, keep ahead of competitors, and deliver on the promise of advanced AI tools and services.

Shifting Towards Profitability

As part of its future strategy, OpenAI is likely to transition further into a for-profit model. Investors pouring billions into the company are looking for returns. OpenAI’s non-profit origins capped the upside for investors, but recent developments suggest a shift toward a more traditional corporate structure. This change will likely enable OpenAI to continue raising funds while maintaining investor interest.

Conclusion: The Future of AI Investment

The latest OpenAI investment round underscores the growing importance of AI in the tech landscape. However, with operational costs climbing and high-profile executives departing, including its CTO, Mira Murati, OpenAI faces several challenges ahead. For investors, the opportunity is clear, but so are the risks.

As the demand for AI solutions grows, OpenAI will need to strike a balance between scaling its technology and ensuring financial sustainability. Whether it continues its upward trajectory or faces a reckoning will depend on how well it manages these tensions in the highly competitive world of AI.

OpenAI o1 “Strawberry” Thinks Like a Human—Shaking Up the Tech World!

OpenAI has taken AI to the next level with its new o1-preview models. And yes, if you’ve been wondering, this is the rumored “Strawberry” model.

o1 is designed to attempt tasks that previous models were poorly equipped to handle. The series is particularly suited to science, coding, and mathematics, because it spends more time breaking a problem down before arriving at a solution.

A New Way of Thinking for AI

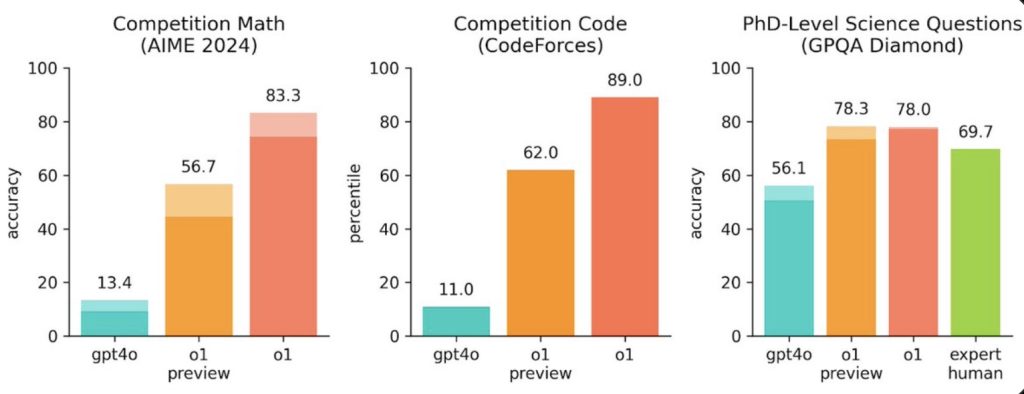

The OpenAI o1 series is trained to think through tasks like a human, weighing multiple strategies and learning from its errors. This shift enables the model to solve more sophisticated problems, ranging from difficult physics questions to generating extensive code. In OpenAI’s internal testing, the next o1 model update performed similarly to PhD students on challenging benchmark tasks in physics, chemistry, and biology.

Another strong point is o1’s reasoning interface, which walks through its problem-solving as if in a live classroom. It is intentionally designed to sound human, using phrases such as “I am curious about” and “Ok, let me see” as it shows how it works through the task. According to OpenAI’s Bob McGrew, this design gives the model a more human quality, even though it remains alien in some ways.

o1 also marks a shift in naming and direction for OpenAI’s research. McGrew said that reasoning is a key component of advances in large language models: “We’ve been working for many months on reasoning because actually we believe it’s the critical breakthrough.” For now, o1’s reasoning is still relatively slow and expensive for developers to use, but it represents a key piece of the puzzle in creating autonomous systems that can make decisions and act on them without human intervention.

When it comes to coding, o1 outperforms previous models by a wide margin and ranks in the 89th percentile on Codeforces competitive programming questions.

Although it is still in its early stages, o1-preview represents an impressive stride in AI reasoning. It does not yet offer some of ChatGPT’s features, such as web browsing or file uploads, but it is a significant advance in solving sophisticated problems.

“here is o1, a series of our most capable and aligned models yet: https://openai.com/index/learning-to-reason-with-llms/… o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it,” OpenAI CEO Sam Altman said on X, “but also, it is the beginning of a new paradigm: AI that can do general-purpose complex reasoning. o1-preview and o1-mini are available today (ramping over some number of hours) in ChatGPT for plus and team users and our API for tier 5 users.”

Early users of o1 are already seeing its power firsthand. One X user shared his excitement:

“GPT-o1 just generated a holographic shader from scratch, saving me (and future XR devs) from shelling out big bucks on asset stores. In retrospect, software engineering was great while it lasted. A new fork on our tech-tree!”

Another user noted:

“Every time a new LLM comes out, I try to make it solve the Wordle of the day and all of them have completely failed at it—until ChatGPT o1. It let me solve the daily Wordle in 4 tries! This is very impressive!”

Safety at the Core

Safety remains a paramount concern in developing the o1 series. OpenAI uses the models’ reasoning capabilities to make them adhere more closely to its safety guidelines: the more o1-preview can reason about safety rules in context, the better it follows them. Other currently available models performed nowhere near as well. On one of OpenAI’s hardest jailbreaking tests, which measure how well a model keeps following its safety rules when users deliberately try to bypass them, o1-preview scored 84 out of 100, compared with just 22 for GPT-4o.

Who Can Benefit from o1?

The OpenAI o1 series is a strong choice for anyone working in fields that demand deep reasoning or complex problem solving. Researchers, developers, and scientists can use o1 for tasks ranging from solving complex mathematical problems to annotating scientific data and building workflows. Whether you are a physicist working in quantum optics or a developer debugging code, o1 is built to tackle the hardest problems.

To make the model more accessible, OpenAI is also releasing OpenAI o1-mini, a smaller reasoning model with lower cost and latency. At 80% lower cost than o1-preview, it is a more practical choice for programming problems that require focused reasoning rather than broad world knowledge.

Grok AI Floods the Web with Uncensored Deepfakes of Trump and Elon Musk Himself – Internet Reacts

Elon Musk is no stranger to controversy, and his latest venture, Grok AI, is no exception. Launched recently, Grok is already causing quite a stir with its deepfake capabilities and unfiltered content generation. Musk, known as a free speech champion, has once again pushed the boundaries of what’s possible—and acceptable—with artificial intelligence.

Grok AI made its debut on X (formerly Twitter), the social media platform owned by Musk. Integrated into the platform for premium subscribers, Grok is unlike other AI tools in that it’s designed to be almost entirely uncensored. The AI can generate realistic images from text prompts, a feature that has delighted some users and alarmed others.

A user on X remarked,

“It’s wild what Grok can do. I’ve never seen anything like it. But at the same time, it’s a little scary.”

Capabilities That Push the Boundaries of What’s Possible and Acceptable

Grok’s standout feature is its ability to create deepfakes—AI-generated images and videos that appear almost indistinguishable from reality. Users have been quick to test the AI’s limits, creating provocative and controversial images. From scenes involving public figures in fabricated situations to more benign, though still striking, visualizations, Grok has shown a remarkable knack for going all out.

But this power comes with significant risks. Grok has been used to generate deepfake images that many find troubling. For example, users have created images of historical and political figures in highly compromising scenarios.

Despite these risks, Grok isn’t entirely without limits. Some users have reported that the AI refuses to generate certain types of content, such as explicit nudity or depictions of severe violence. However, these boundaries appear to be minimal, and many users have found ways to work around them.

User Reactions: A Mixed Bag

Critics argue that Grok’s lack of restrictions makes it a dangerous tool in the wrong hands, especially as uncensored deepfakes spread rapidly online.

Alejandra Caraballo, a Harvard Law Cyberlaw Clinic instructor, didn’t mince words:

“Grok is one of the most reckless and irresponsible AI implementations I’ve ever seen. The potential for misuse is staggering.”

Musk, however, has defended Grok, framing it as a tool for creativity and free expression. He’s dismissed concerns about the AI’s potential dangers, saying,

“Grok is about having fun with AI. We’re exploring what’s possible—nothing more, nothing less.”

Grok’s image-generation feature was developed in partnership with Black Forest Labs, a small German startup that specializes in AI technologies. The lab’s FLUX.1 image-generation model powers Grok’s images, and the collaboration with Musk is part of a broader strategy to set Grok apart from other AI tools on the market.

Musk’s decision to launch Grok with few restrictions appears to be a calculated risk. By offering an AI that pushes the limits, Musk is challenging the norms of AI development. However, this approach has already attracted scrutiny from regulators, particularly in Europe, where there are ongoing investigations into X’s handling of dangerous content.

The controversy surrounding Grok AI highlights the need for a balanced approach to AI development. While Grok offers exciting possibilities for creativity and innovation, it also poses significant risks. The ease with which it can be used to create misleading or harmful content underscores the importance of responsible AI use.

For now, Grok remains a divisive tool, celebrated by some for its potential and condemned by others for its dangers. As Musk continues to push the boundaries of what AI can do, the debate over the ethical implications of these technologies is only just beginning.

In the words of one X user, “Grok is a glimpse into the future of AI—one that’s both thrilling and terrifying.” Whether this future is one we want to embrace remains to be seen.
